Red Pools – Live at Blue Rinse 30-10-14

A fantastic audio/video recording by Gustav Thomas and Elvin Brandhi of a Red Pools set I performed for October’s Blue Rinse as part of Newcastle University Music Festival.

Probably the last set of its kind, performing material from all three current Red Pools releases. In addition to the music, I designed a simple Max for Live patch which split the playing audio into three frequency bands and converted the amplitude of each band into DMX RGB values. This created a very simple but effective light show on two LED lights which responded dynamically to the music, as can be seen in the video.
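As a rough illustration of the idea (this is not the Max for Live patch itself), the three-band split and amplitude-to-DMX mapping could be sketched in Python as below. The one-pole filters, the crossover frequencies (200 Hz and 2000 Hz), the `gain` parameter and all function names are assumptions made for the sketch; the original patch may well have worked differently.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """Very simple one-pole low-pass filter (an assumed stand-in for the patch's filters)."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def split_three_bands(samples, low_cut_hz, high_cut_hz, sample_rate):
    """Split a signal into low, mid and high bands by subtracting low-passed copies."""
    low = one_pole_lowpass(samples, low_cut_hz, sample_rate)
    low_and_mid = one_pole_lowpass(samples, high_cut_hz, sample_rate)
    mid = [lm - l for lm, l in zip(low_and_mid, low)]
    high = [x - lm for x, lm in zip(samples, low_and_mid)]
    return low, mid, high


def rms(samples):
    """Root-mean-square amplitude of a block of samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def amplitude_to_dmx(amplitude, gain=1.0):
    """Map an amplitude (nominally 0..1) to a DMX channel value, clamped to 0..255."""
    return max(0, min(255, round(amplitude * gain * 255)))


def bands_to_rgb(samples, sample_rate=44100, low_cut_hz=200.0,
                 high_cut_hz=2000.0, gain=1.0):
    """Return (red, green, blue) DMX values from the low, mid and high band levels."""
    bands = split_three_bands(samples, low_cut_hz, high_cut_hz, sample_rate)
    return tuple(amplitude_to_dmx(rms(band), gain) for band in bands)
```

Called once per block of audio, `bands_to_rgb` gives three values that could be written to the red, green and blue channels of a DMX fixture, so bass-heavy material pushes the light towards red and bright material towards blue.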

RE/CEPTOR – TUSK Festival 2014

This is the video (taken by Harry Wheeler) from the TUSK Archive of the first of three performances by RE/CEPTOR at Blank studios.

RE/CEPTOR was a bespoke project for TUSK Festival comprising long-established duo Trans/Human (with whom I’ve worked before), contemporary dancer and movement artist Nicole Vivien Watson, and myself.

The project is a multifaceted one. The three performances for TUSK Festival involved Nicole and me performing in Blank Studios, with Trans/Human performing in Marseille concurrently. The audio from Trans/Human’s performance was streamed live to Blank Studios via Nicecast, an OS X internet radio broadcasting application, and then fed into the performance setup in Blank Studios (more on this later). The performances used four-speaker ‘surround’ sound.

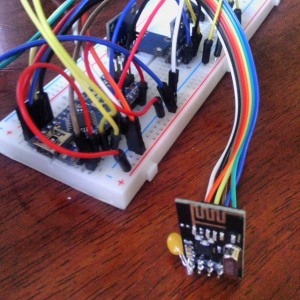

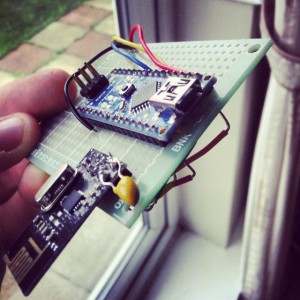

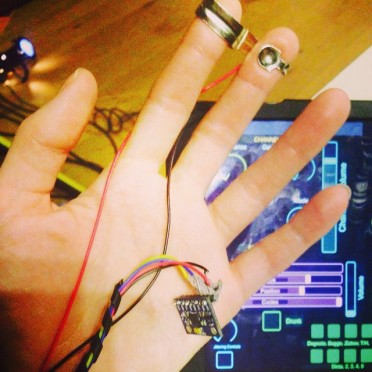

The main focal point of the performances was Nicole and her movement. I designed a custom wireless sensor array for her to wear throughout the performance, which tracked light, body capacitance, temperature and movement. The sensors were attached to Nicole at relevant points (movement sensors on the hands, body capacitance sensors on the fingers and so on) and read by an Arduino Nano, which collected the data and sent it wirelessly via an inexpensive nRF24L01 chip to a second Arduino connected to my laptop, for which I built a shield to house another nRF24L01 chip. The data received by this second Arduino was read by Max 6 and formed a crucial aspect of the performance as a whole.
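To give a sense of what reading such a data stream on the laptop side involves, here is a minimal Python sketch. It is not the Max 6 patch or the Arduino code used in the performances. The line format (`light,capacitance,temperature,x,y,z`), the 10-bit value range and the names `SensorFrame` and `parse_frame` are all assumptions made purely for illustration.

```python
from dataclasses import dataclass

ADC_MAX = 1023  # assumption: readings arrive as 10-bit values (0..1023)


@dataclass
class SensorFrame:
    """One set of readings, each normalised to the range 0.0..1.0."""
    light: float
    capacitance: float
    temperature: float
    movement: tuple  # (x, y, z) from an accelerometer


def parse_frame(line):
    """Parse one hypothetical 'light,cap,temp,x,y,z' line; return None if malformed."""
    parts = line.strip().split(",")
    if len(parts) != 6:
        return None
    try:
        raw = [int(p) for p in parts]
    except ValueError:
        return None
    # Clamp to the expected range, then normalise to 0.0..1.0.
    values = [min(max(v, 0), ADC_MAX) / ADC_MAX for v in raw]
    return SensorFrame(values[0], values[1], values[2], tuple(values[3:]))
```

Dropping malformed lines rather than raising an error is a deliberate choice in this sketch: with a wireless link, an occasional corrupted or partial line is likely, and skipping it keeps the performance running.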

Here are some photos of the prototyping, building and wearing of the sensor array:

Top to bottom: Prototyped version of the sensor array, the Arduino and radio chip, the completed array, and one of Nicole’s hands showing an accelerometer and body capacitance sensors.

Once the data was in Max 6 it was used to control a number of aspects of the performance. Nicole’s movement controlled effects on the stereo feed from Marseille – changing filter poles and adding delay and decay to the feed. This effected stereo feed was broadcast on two of the four speakers in the room, the ones in the back two corners. The other two speakers played two granular synthesisers, one controlled by Nicole’s movement and one controlled by me. Nicole’s body temperature and body capacitance were also summed to create a low frequency sine wave drone which was played on all four speakers. Nicole’s data also controlled the strobe light via DMX, which was the main source of light in the space. For the first performance (the one in the video) the strobe light frequency was a summation of body temperature, light and body capacitance. After discussion with Nicole, however, we felt that this obfuscated the relationship between her and the strobe, so we changed the program for the other two performances so that the strobe light was directly controlled by the position of one of her hands, which was much more effective.
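The difference between the two strobe mappings can be sketched in a few lines of Python. This is only an illustration of the principle, not the Max 6 patch. The 1 to 20 Hz range, the assumption that every sensor value is already normalised to 0..1, and the choice to divide the sum by three to keep it in range are all mine.

```python
def scale(value, out_min, out_max):
    """Clamp a 0..1 value and scale it linearly into the range out_min..out_max."""
    value = min(max(value, 0.0), 1.0)
    return out_min + value * (out_max - out_min)


def strobe_rate_summed(temperature, light, capacitance, min_hz=1.0, max_hz=20.0):
    """First performance: strobe rate from a sum of three sensors.

    The sum is divided by three here (an assumption) so it stays within 0..1.
    """
    return scale((temperature + light + capacitance) / 3.0, min_hz, max_hz)


def strobe_rate_direct(hand_position, min_hz=1.0, max_hz=20.0):
    """Later performances: strobe rate follows one hand position directly."""
    return scale(hand_position, min_hz, max_hz)
```

In the summed version a change in any one sensor is diluted by the other two, so a gesture has no clearly visible consequence. In the direct version raising or lowering the hand changes the strobe rate one-to-one, which is the relationship an audience can actually read.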

All of this was tied together for me by a TouchOSC interface, which controlled parameters of the effects Nicole was driving that did not lend themselves to control by movement (unstable parameters such as filter resonance), and gave me control of one of the granular synthesisers, allowing a kind of synthesiser duet with Nicole.

The result of this was four channels of sound, two coming from Marseille and two from the room, but all were dependent on the movements of Nicole, locking all performers to a central groove. Trans/Human could not themselves hear what was going on in Blank studios, but in Blank we were very much improvising with their material. Being able to mesh all of us in this way was perfect – Trans/Human’s tour and the audio they collected and performed, my building/patching/sound generation mediated through Nicole’s movement. The binary state between darkness and light combined with full-bodied four-channel sound created an immersive experience and environment for both performers and audience, and yielded, to my mind, three distinct, varied performances.

Thanks to Lee Etherington, Harry Wheeler and Hugs Bison for organisation and documentation, and thanks to the folks at Blank studios for being immensely helpful in accommodating our audio/internet needs.

Nicole is the creative director of Surface Area Dance Theatre –

http://www.surfacearea.org.uk/company.html

Trans/Human is Adam Denton and Luke Twyman –

http://transhuman.bandcamp.com/

Live Coding – 11th December 2014 – ICMuS Student Concert

For my Major Project this year I am developing the discipline of Live Coding using the SuperCollider programming language.

After practising live electronic music for a few years I’ve decided to do this partly in order to perform using open source software, but also because live coding allows me a much more tactile approach to electronic performance. Previously my performances were very predetermined, in the sense that most of the material I played was fixed, with relatively little scope for determining the arc of the performance on the fly.

With live coding I can merge electronic music performance and improvisation to a much greater degree. Playing music determined by code and algorithms written live gives me scope to improvise performances, and programmed randomness lets me perform electronic music that grows organically and can be edited on the fly, in real time.
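As a small illustration of what "programmed randomness" can mean, here is a sketch of a random-walk melody generator. It is written in Python rather than SuperCollider purely for illustration and is not taken from my performance code; the function name, the `max_step` parameter and the example scale are all hypothetical.

```python
import random


def random_walk_melody(scale, length, max_step=2, seed=None):
    """Wander up and down a scale in small random steps, staying inside it.

    Passing a seed makes the result repeatable; leaving it as None gives a
    different melody each time.
    """
    rng = random.Random(seed)
    index = rng.randrange(len(scale))
    melody = []
    for _ in range(length):
        melody.append(scale[index])
        step = rng.randint(-max_step, max_step)
        # Clamp so the walk never leaves the scale.
        index = min(max(index + step, 0), len(scale) - 1)
    return melody


# Example: eight notes from a C minor pentatonic scale (MIDI note numbers).
c_minor_pentatonic = [60, 63, 65, 67, 70, 72]
phrase = random_walk_melody(c_minor_pentatonic, 8)
```

In a live setting, changing `max_step` or swapping the scale while the pattern keeps running is the kind of edit that reshapes the music without stopping it.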
